An Empirical Analysis of Encoder-Decoder (U-Net) Variants for Medical Image Segmentation

DOI:

https://doi.org/10.26438/ijsrcse.v13i1.610

Keywords:

Medical Image Segmentation, U-Net variants, Brain MRI, Breast Ultrasound, ResU-Net, Encoder-Decoder, Image processing, Computer Vision

Abstract

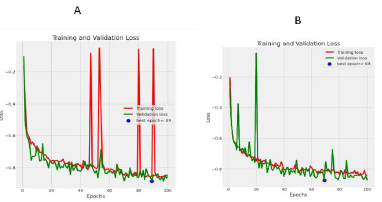

U-Net convolutional neural networks have become a cornerstone of medical image processing, particularly for complex segmentation tasks. However, with the proliferation of U-Net variants, it is essential to evaluate their performance across diverse medical datasets to determine the most suitable architecture for a given application. This study presents a comprehensive empirical analysis of six U-Net variants, namely U-Net, U-Net++, ResU-Net, TransU-Net, V-Net, and U-Net 3+, focusing on their effectiveness in medical image segmentation. The research used two publicly available medical image datasets: the Breast Cancer Ultrasound dataset and the Brain MRI FLAIR dataset. These datasets were selected to represent a range of challenges in medical imaging, including differences in noise level, contrast, color channels, and lesion visibility. Each U-Net variant was trained and evaluated under consistent experimental conditions, with the Dice Similarity Coefficient (DSC), the Jaccard Index, and accuracy serving as the primary evaluation metrics. The findings reveal that while all U-Net variants perform strongly, ResU-Net consistently outperformed the other architectures across both datasets. Specifically, ResU-Net achieved the highest Jaccard Index and DSC values, recording 0.5158 and 0.5930, respectively, on the Breast Cancer Ultrasound dataset and 0.7923 and 0.8730, respectively, on the Brain MRI dataset. Additionally, ResU-Net achieved the highest accuracy on the Breast Ultrasound dataset (96.20%) and ranked second on the Brain MRI dataset with an accuracy of 99.70%, trailing slightly behind U-Net++, which achieved 97.72%. Conversely, U-Net 3+ recorded the lowest performance on both datasets. ResU-Net's superior performance suggests that its residual connections enhance feature propagation and mitigate the vanishing gradient problem, leading to better segmentation outcomes.
The weaker results of U-Net 3+ indicate that greater architectural complexity does not always translate into better results. This study offers insights into the strengths and weaknesses of different U-Net variants, guiding practitioners in choosing the most suitable model for their specific applications, especially in the medical image processing domain.
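The three evaluation metrics named in the abstract (DSC, Jaccard Index, and accuracy) can all be derived from the pixel-level confusion counts between a predicted mask and a reference mask. The following is a minimal pure-Python sketch, not the authors' evaluation code. It assumes masks are already flattened to equal-length sequences of 0/1 values; the function names (`confusion_counts`, `dice`, `jaccard`, `pixel_accuracy`) and the convention that two empty masks score 1.0 are illustrative assumptions.

```python
from collections.abc import Sequence


def confusion_counts(pred: Sequence[int], target: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for two flattened binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("pred and target must contain the same number of pixels")
    if len(pred) == 0:
        raise ValueError("masks must contain at least one pixel")
    tp = fp = fn = tn = 0
    for p, t in zip(pred, target):
        if p and t:
            tp += 1  # lesion pixel correctly predicted
        elif p:
            fp += 1  # background pixel predicted as lesion
        elif t:
            fn += 1  # lesion pixel missed
        else:
            tn += 1  # background pixel correctly predicted
    return tp, fp, fn, tn


def dice(pred: Sequence[int], target: Sequence[int]) -> float:
    """DSC = 2|A ∩ B| / (|A| + |B|) = 2TP / (2TP + FP + FN)."""
    tp, fp, fn, _ = confusion_counts(pred, target)
    denom = 2 * tp + fp + fn
    # Assumption: two empty masks are treated as a perfect match.
    return 1.0 if denom == 0 else 2 * tp / denom


def jaccard(pred: Sequence[int], target: Sequence[int]) -> float:
    """Jaccard (IoU) = |A ∩ B| / |A ∪ B| = TP / (TP + FP + FN)."""
    tp, fp, fn, _ = confusion_counts(pred, target)
    denom = tp + fp + fn
    # Same empty-mask assumption as dice().
    return 1.0 if denom == 0 else tp / denom


def pixel_accuracy(pred: Sequence[int], target: Sequence[int]) -> float:
    """(TP + TN) / total pixels; correctly labelled background also counts."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    return (tp + tn) / (tp + fp + fn + tn)


# Toy 10-pixel example: the reference lesion covers the last four pixels.
target = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
pred = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]
print(round(dice(pred, target), 4), jaccard(pred, target), pixel_accuracy(pred, target))
# → 0.5714 0.4 0.7
```

For a single image, the two overlap scores are linked by DSC = 2J / (1 + J), so they always rank predictions on that image identically; the identity does not hold exactly for scores averaged over many images. Because pixel accuracy also rewards true negatives, it can remain high on images where the lesion covers only a small area even when overlap with the lesion itself is modest.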


License

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, which allows third parties to share their work (copy, distribute, and transmit it) and to adapt it, provided that the authors are credited and that, in the event of reuse or distribution, the terms of this license are made clear.