Fine-Tuning Depth Analysis: Identifying the Sweet Spot for Maximum Accuracy in CNNs

DOI: https://doi.org/10.26438/ijsrcse.v13i3.703

Keywords: Convolutional Neural Network, Fine-tuning, Transfer Learning, pre-train, Grad-CAM, Unfreezing

Abstract

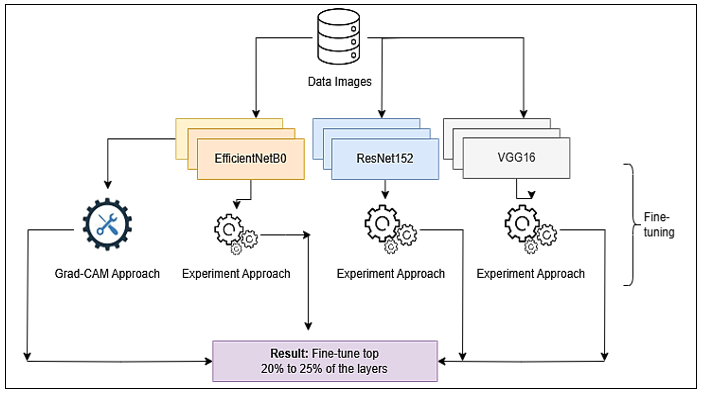

Convolutional Neural Networks (CNNs) have transformed computer vision through their exceptional performance in image classification, face recognition, and object detection. Much of their success is due to transfer learning, in which models pre-trained on large datasets such as ImageNet are fine-tuned (adapted) for new tasks, even when only limited labeled data is available. Fine-tuning involves gradually unfreezing and retraining the layers of a pre-trained model to obtain optimal accuracy. However, determining the optimal number of layers to unfreeze remains a critical challenge. Unfreezing too few layers may limit the model's ability to adapt to task-specific features, leading to underfitting, while fine-tuning too many layers risks overfitting, thereby compromising generalization on unseen data. This research aims to systematically determine the number of layers to fine-tune in a pre-trained CNN to achieve optimal performance. The study combines Grad-CAM visualization with an experimental approach in which the ResNet152, EfficientNetB0, and VGG16 networks are fine-tuned. Findings show that unfreezing roughly one-fifth (20% to 25%) of the top layers gives optimal performance, and that unfreezing too many layers leads to overfitting. However, VGG16 requires unfreezing all of its layers for optimal performance because it has comparatively few layers (18 layers). Future research can examine other pre-trained networks to verify whether these findings generalize. This research is relevant to AI researchers, AI engineers, data analysts, and anyone who builds AI systems.
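The abstract's central recommendation, unfreezing roughly the top 20% to 25% of a pre-trained network's layers, can be illustrated with a minimal sketch. The helper names `num_layers_to_unfreeze` and `unfreeze_top_fraction` are hypothetical and do not come from the paper. The sketch assumes a list of layer objects ordered from input to output, each with a boolean `trainable` attribute, modeled on a Keras `Model.layers` list. Rounding fractional layer counts half up is also an assumption, since the abstract states no rounding rule.

```python
import math
from typing import Any, Sequence


def num_layers_to_unfreeze(total_layers: int, fraction: float) -> int:
    """Return how many top layers to unfreeze for a given fraction.

    The product is rounded half up (an assumption) and clamped to the layer count.
    """
    if total_layers < 0:
        raise ValueError("total_layers must be non-negative")
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return min(total_layers, math.floor(total_layers * fraction + 0.5))


def unfreeze_top_fraction(layers: Sequence[Any], fraction: float) -> int:
    """Freeze every layer, then mark the top `fraction` of them trainable.

    `layers` must be ordered from input (bottom) to output (top), and each
    element must expose a boolean `trainable` attribute (hypothetical
    duck-typed interface, modeled on Keras layers).
    Returns the number of layers left trainable.
    """
    k = num_layers_to_unfreeze(len(layers), fraction)
    cutoff = len(layers) - k
    for index, layer in enumerate(layers):
        # Layers below the cutoff keep their pre-trained weights fixed;
        # layers at or above it are retrained on the new task.
        layer.trainable = index >= cutoff
    return k


if __name__ == "__main__":
    from types import SimpleNamespace

    # Stand-in for a pre-trained backbone with 20 layers.
    backbone = [SimpleNamespace(name=f"layer_{i}", trainable=True) for i in range(20)]
    n = unfreeze_top_fraction(backbone, 0.25)
    print(n, [layer.name for layer in backbone if layer.trainable])
```

With Keras, for instance, one could call `unfreeze_top_fraction(base_model.layers, 0.2)` and then recompile the model so the new `trainable` flags take effect. Keras also counts non-weight layers such as input and pooling layers, so these counts may not match the layer counts reported in the paper.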

License

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, allowing third parties to share their work (copy, distribute, transmit) and to adapt it, under the condition that the authors are given credit and that in the event of reuse or distribution, the terms of this license are made clear.